How to Deploy & Run StableLM

April 20, 2023

Deprecated: This blog article is deprecated. We strive to rapidly improve our product, so some of the information in this post may no longer be accurate or applicable. For the most current instructions on deploying a model like StableLM to Banana, please check our updated documentation.

Let's walk through how to deploy and run the StableLM language model on serverless GPUs. You only need minimal AI knowledge or coding experience to follow along and deploy your own StableLM model.

This tutorial is easy to follow because we are using a Community Template. Templates are user-submitted model repositories that are optimized and set up to run on Banana's serverless GPU infrastructure. All you have to do is click deploy, and in a few minutes your model is ready to use on Banana. Pretty sweet! Here is the direct link to the StableLM model template on Banana.

What is StableLM?

StableLM is the first open-source language model developed by Stability AI. It is available for commercial and research use, and it marks the company's first step into language models after it developed and released the popular text-to-image model Stable Diffusion in 2022.

StableLM Deployment Tutorial

StableLM just dropped today. 👀

StableLM is @StabilityAI's first open-source language model.

here's how to run it in your terminal in minutes 👇🛠️ pic.twitter.com/mHsCvXJS8K

— Banana (@BananaDev_) April 20, 2023


StableLM Code Sample

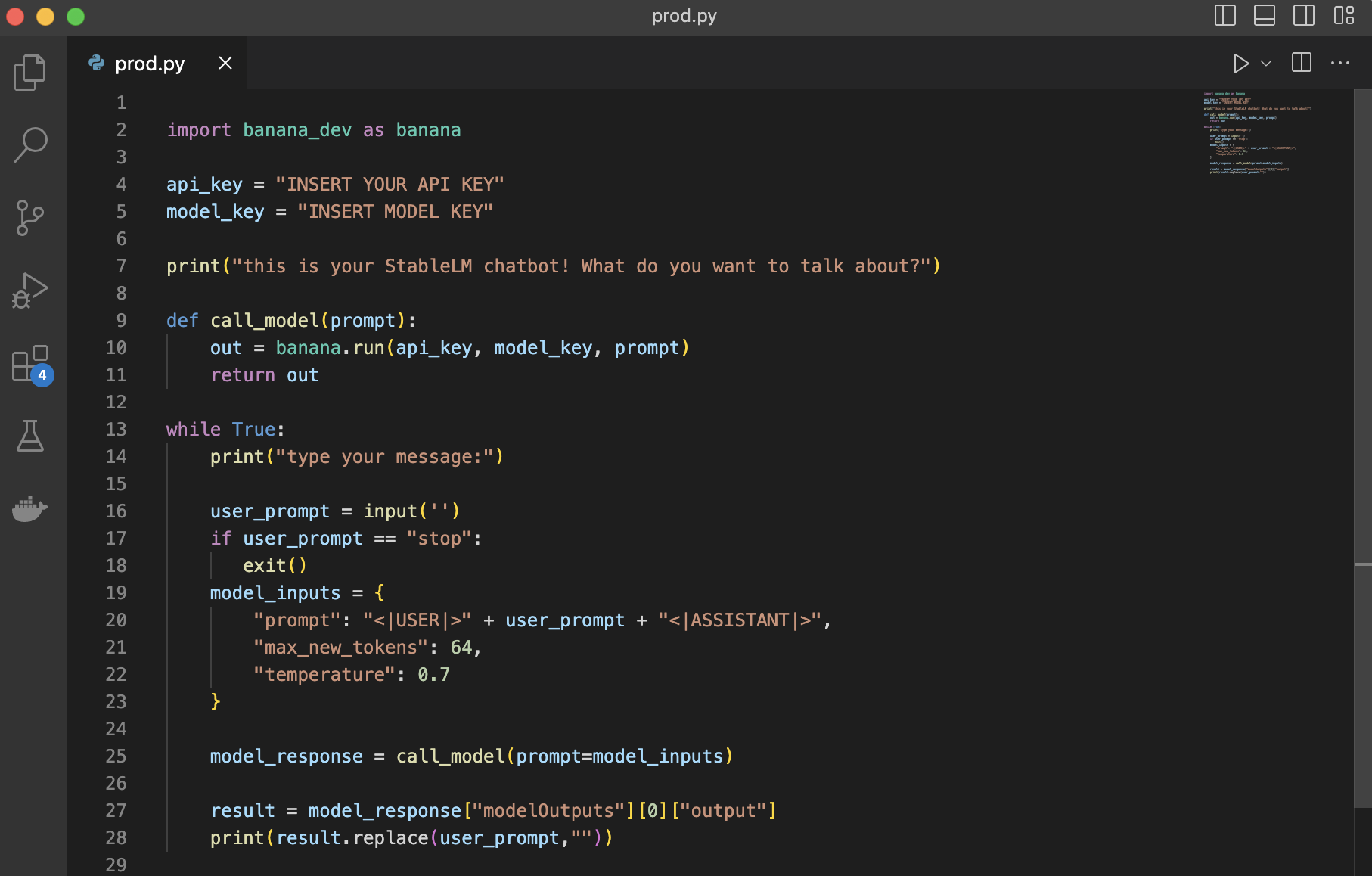

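Copy and paste the code below, replacing the placeholders with your own API key and model key. Make sure your indentation matches the screenshot above, since Python relies on indentation to structure the code: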

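```python
import banana_dev as banana

# Replace these placeholders with your Banana API key and the model key
# of your deployed StableLM template.
api_key = "INSERT API KEY"
model_key = "INSERT MODEL KEY"

print("this is your StableLM chatbot! What do you want to talk about?")


def call_model(prompt):
    # Send the inputs to your StableLM deployment and return the raw response.
    out = banana.run(api_key, model_key, prompt)
    return out


while True:
    print("type your message:")
    user_prompt = input()

    # Type "stop" to end the chat.
    if user_prompt == "stop":
        break

    # Wrap the message in StableLM's chat tokens and set the maximum
    # reply length (max_new_tokens) and randomness (temperature).
    model_inputs = {
        "prompt": "<|USER|>" + user_prompt + "<|ASSISTANT|>",
        "max_new_tokens": 64,
        "temperature": 0.7,
    }

    model_response = call_model(prompt=model_inputs)

    # Remove your message from the output so only the model's reply is printed.
    result = model_response["modelOutputs"][0]["output"]
    print(result.replace(user_prompt, ""))
```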
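The chat loop above mixes three jobs: building the prompt, calling the model, and cleaning up the reply. As a minimal sketch (not part of the template or the `banana_dev` SDK), the two steps that don't need a GPU can be pulled into small helpers so you can check them offline. The helper names `build_model_inputs` and `extract_reply` are hypothetical, and the response shape `{"modelOutputs": [{"output": ...}]}` plus the assumption that the output starts with an echo of your message are taken from the tutorial code, not from Banana's documentation.

```python
from typing import Any, Dict

# Hypothetical helpers (not part of banana_dev or the StableLM template).
# They assume the prompt format and response shape used in the tutorial code.


def build_model_inputs(user_prompt: str,
                       max_new_tokens: int = 64,
                       temperature: float = 0.7) -> Dict[str, Any]:
    """Wrap the user's message in StableLM's chat tokens, as in the chat loop."""
    return {
        "prompt": "<|USER|>" + user_prompt + "<|ASSISTANT|>",
        "max_new_tokens": max_new_tokens,
        "temperature": temperature,
    }


def extract_reply(model_response: Dict[str, Any], user_prompt: str) -> str:
    """Return the generated text, dropping a leading echo of the user's message.

    Assumes the response looks like {"modelOutputs": [{"output": "..."}]}.
    """
    output = model_response["modelOutputs"][0]["output"]
    # Only strip the echo at the start, so the reply may still quote the prompt.
    if output.startswith(user_prompt):
        output = output[len(user_prompt):]
    return output.strip()


if __name__ == "__main__":
    # A fake response stands in for banana.run(...) so this runs without a deployment.
    fake_response = {"modelOutputs": [{"output": "Hi there Hello! How can I help?"}]}
    print(build_model_inputs("Hi there"))
    print(extract_reply(fake_response, "Hi there"))
```

One design note: the tutorial's `.replace(user_prompt, "")` removes every occurrence of your message, including any place where the model quotes it back in its reply. Stripping only the leading echo avoids that, at the cost of leaving the output untouched if the template ever stops echoing the prompt.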

Wrap Up

Reach out to us if you have any questions or want to talk about StableLM. You can find us on our Discord or reach us on Twitter. What other machine learning models would you like to see a deployment tutorial for? Let us know!